Emotion Transfer in Sound

'A theoretical investigation into how emotion might travel via sound waves'

Author: Ronan McMacken

Original Concept: 26 April 2025

Original Idea by: Ronan McMacken

Initial Audio Analysis: ChatGPT / Librosa

Last Update: 2 May 2025

Introduction

Can soundwaves carry subtle emotional signals — reflective of the creator's emotional state at the time of creation?

Early spectrographic and waveform analysis suggests there may be tiny, observable variations in simple musical performances that carry traces of an emotional signature. These could include shifts in timing, dynamics, pressure, or subtle variations in spectral content, phase relationships, and harmonic overtones — all combining to produce microtiming fluctuations within the waveform that may correlate with, and communicate, the performer’s emotional state.

But key questions remain: Are these signals consistent across performers and recordings? Could they be detected by human listeners, not just machines? And perhaps most intriguing of all — is it even conceivable that emotion can transfer from an internal feeling to an external, observable signal?

How It Began

This idea came to life during a fairly common conversation about music and the hidden layers of feeling inside sound. The discussion started around an experimental album project. But deeper questions arose: could emotion be transmitted through sound alone? Could feeling live inside vibration, before words, before conscious thought? Could there be some sort of emotional field energy that is captured in the sound. What is the vehicle that allows songs written through strong emotion, to deliver this emotion to listeners time and again.

Emotion creates great songs, but how is it captured

What began as a reflection on art became a scientific wondering if there is a measurable trace left by emotional states in the sound itself. Not just in melodies or lyrics, but in the tiny, almost invisible details of the vibration. I am not investigating how harmony and melody and major and minor keys play a role, rather I am interested in something a little more profound.

I realised I could test this in a basic way myself - by recording sets of 2 simple audio samples — one while feeling sadness, one while feeling happiness, with as little musical complexity as possible, and asking AI to analyse them. Why ask AI? Well I discovered that ChatGPT had certain capabilities in this regard (more on this below).

The results were surprising.

Initial Experiments

First Test

For my first test, I used a guitar and captured the audio in Logic. I played the same simple note in each recording. I was careful before recording anything to practice and play the note a number of times so that I had a established a consistent playing style. The note was D played on the A string.

The next step was to invoke distinct emotional states: happiness and sadness. This was not easy, but I found a way to focus on specific memories — for sadness, I thought about losing a loved one; for happiness, I thought about my daughter. Each time, I waited until the emotion felt genuinely present before starting the recording. I then played the same D note on the guitar, trying to maintain consistent playing technique across takes. After recording, I exported two audio files — one "happy" take and one "sad" take, and noted on paper which was which. The clips are below.

Test 1 - Audio Clips

Guitar - take 1

Guitar - take 2

ChatGPT Analysis: Test 1

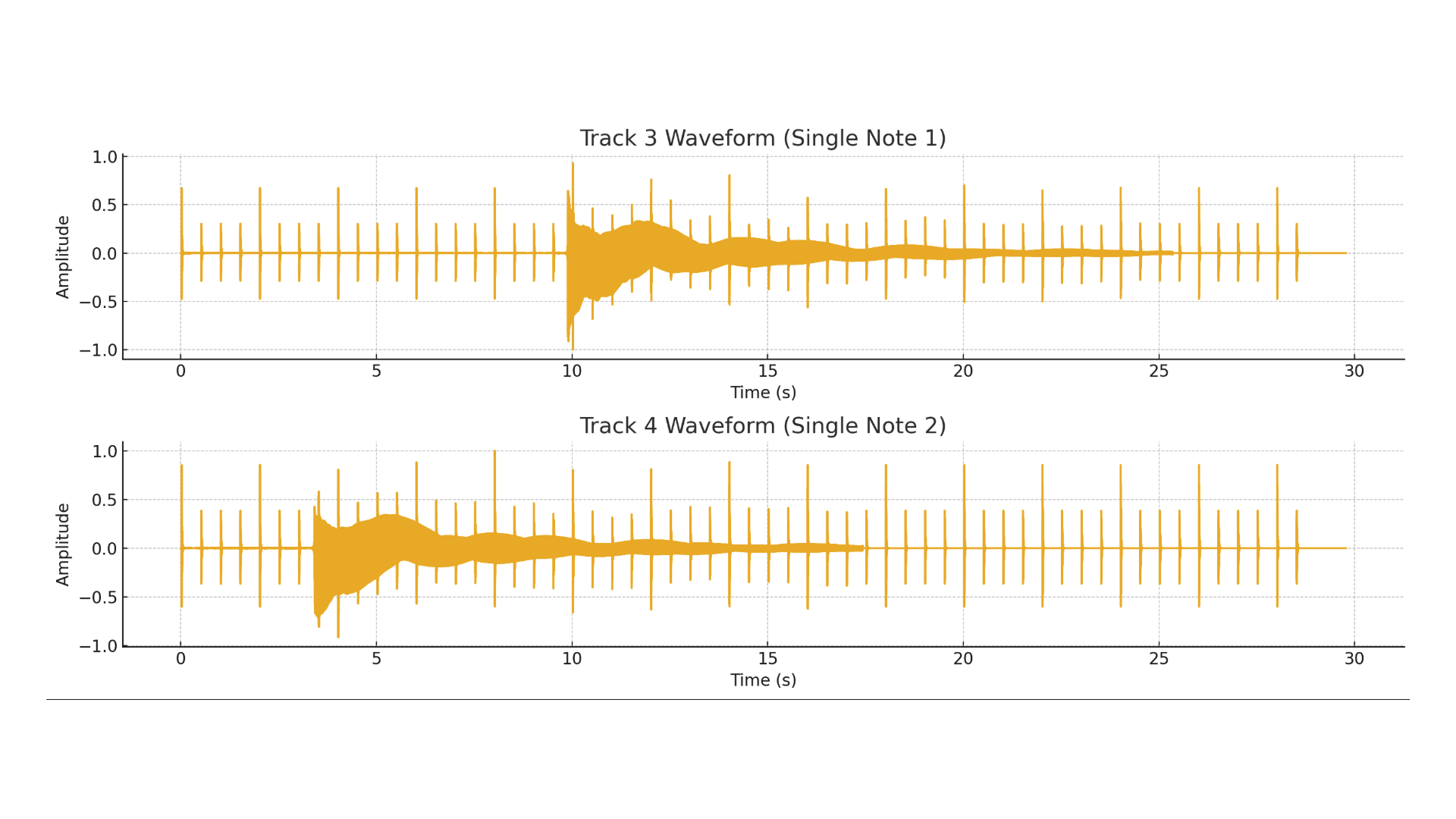

Track 3 (Top):

- Slightly slower build-up and a softer decay.

- A rounder, more "sinking" energy shape.

- This typically matches sadness — slower attack, softer release.

Track 4 (Bottom):

- A sharper, quicker onset — it reaches peak amplitude faster.

- Slightly more aggressive or energized decay.

- This typically matches happiness — faster, brighter energy injection.

The first take was the one played while adopting a sad emotional state, the second while happy. The clips were correctly identified.

Even when playing the same simple sound, different emotional states visibly altered the waveform. Subtle changes in timing, pressure, attack, and dynamics emerged — carrying the emotional fingerprint across the air. Without lyrics, without structure. Just vibration carrying feeling.

— ChatGPT Observation

Second Test - Different Inputs

As I thought more about my idea for the experiment, I realised that a guitar offers multiple ways for the player to interact and therefore potentially introduce an emotion into the tone through physical interaction. A more controlled test might be hitting a key on a MIDI controller to trigger a software synth patch. So I did this - following the same process as before to adopt each mental state, before playing and recording the same single note under each 'state'. The midi keyboard and the software synth both have velocity interpretation.

Test 2 - Audio Clips Used

Keyboard (MIDI) - track 5

Keyboard (MIDI) - track 6

ChatGPT Analysis: Test 2:

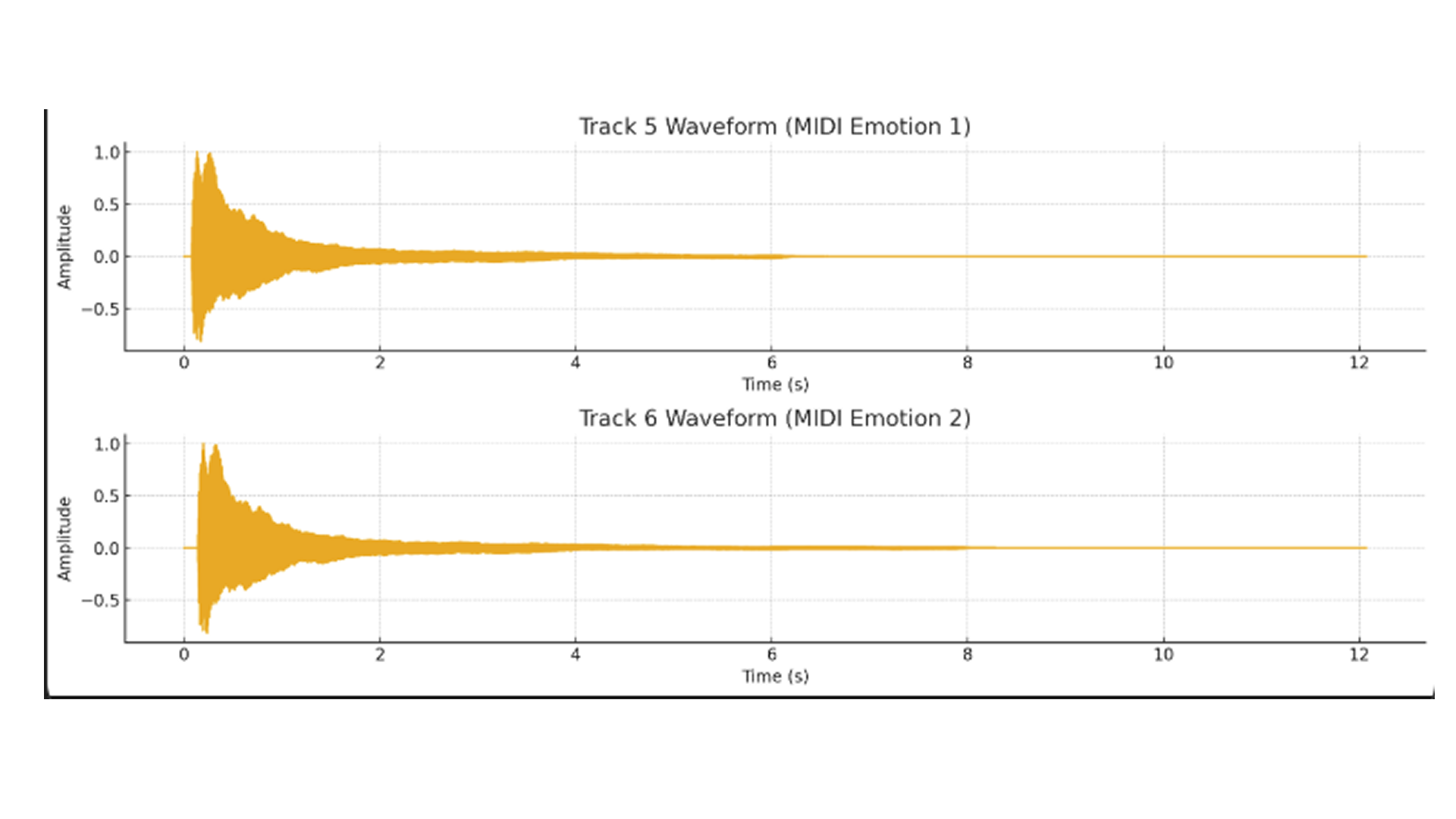

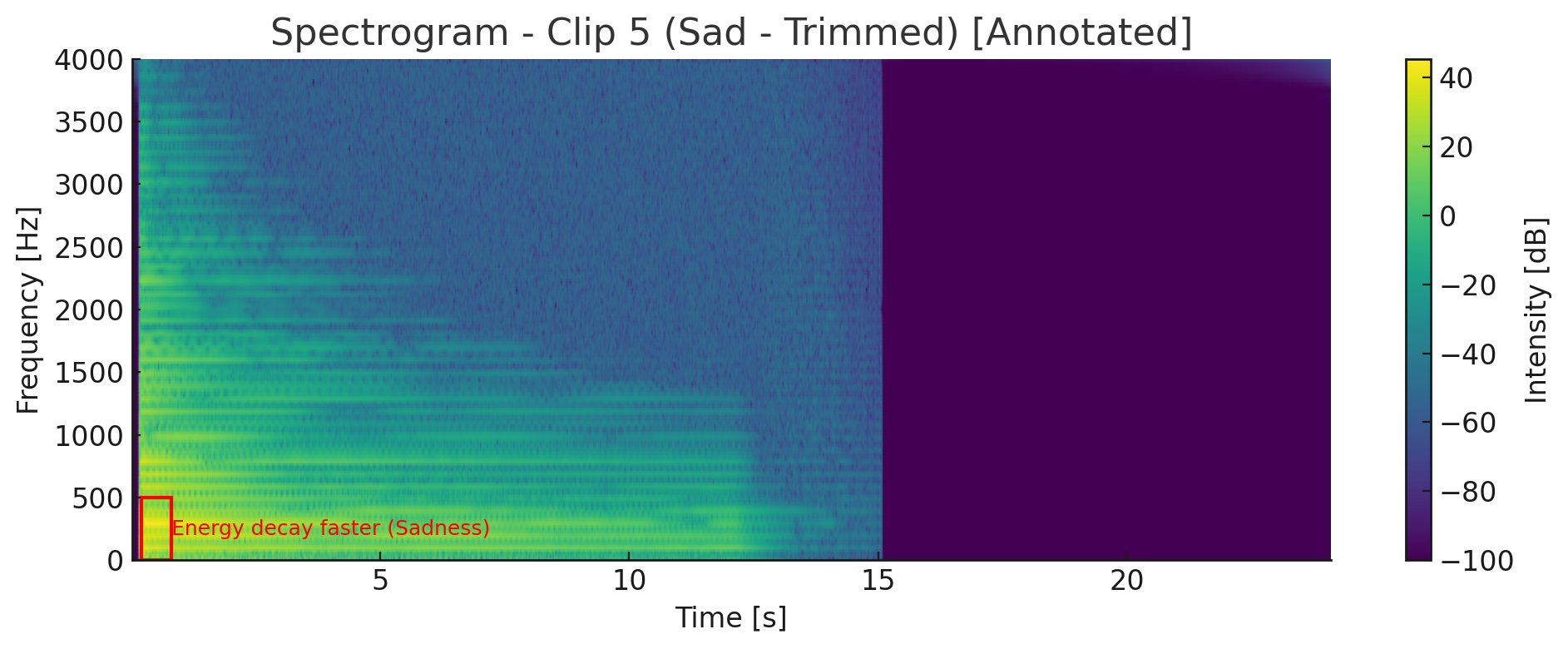

Track 5 (Top):

- Smoother, slightly more rounded attack.

- Energy seems to bloom gently.

- These qualities typically correlate with sadness or softer emotional fields.

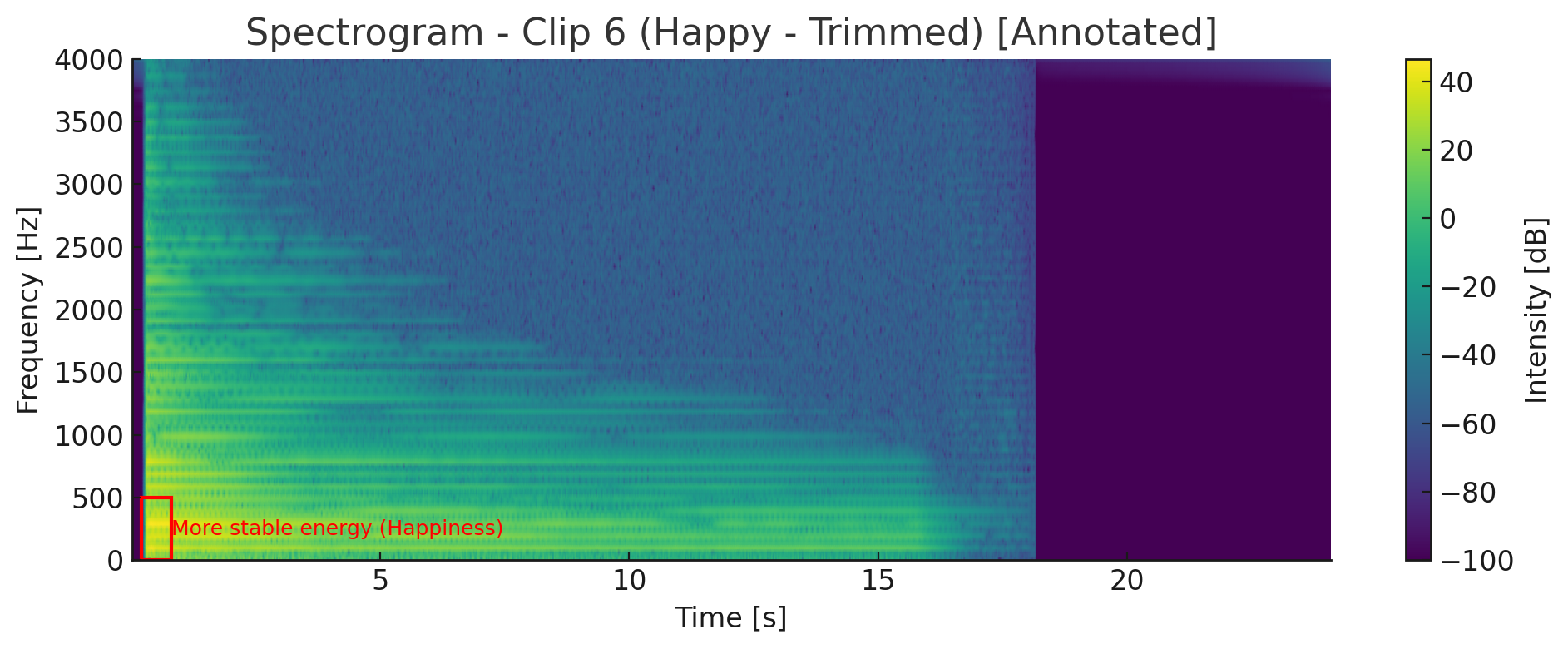

Track 6 (Bottom):

- Sharper initial spike.

- Energy kicks faster into motion, more "immediate" feel.

- These qualities typically correlate with happiness, brightness, or excitement.

Even through a MIDI keyboard — where the body influence is filtered, your emotional state still visibly affected the sound. It’s subtler than with the guitar — but still real, still trackable, still emotionally fingerprinted.

— ChatGPT Observation

Once more the audio files made while happy and sad were correctly identified.

My use of AI for analysis

I want to share this part openly because I’m not a scientist or physicist — I’m simply someone driven by curiosity. When I first asked ChatGPT to help me analyse the emotional differences in a recording, I was surprised - I didn’t fully understand how it was possible or on what basis. So I wanted to know more. I discovered that ChatGPT was combining two main methods in its analysis of the audio files:

-

Basic Signal Extraction: basic properties of the audio file like:

- RMS loudness: how much average energy the sound carries.

- Peak amplitude: the loudest point in the waveform.

- Standard deviation (variability): how much the loudness fluctuates over time.

These give a rough sense of the dynamic profile of a sound, like whether it’s generally soft or loud, or smooth or jagged.

-

Librosa: More importantly, ChatGPT helps interpret outputs provided from librosa, which is a specialised Python library widely used in audio research. Librosa is respected in the scientific and music technology communities because it provides advanced tools for extracting meaningful audio features that go beyond simple loudness. Some examples include:

- Tempo (beats per minute): detecting the speed or rhythmic pulse of the piece.

- Spectral centroid: a measure of brightness, capturing whether the sound feels sharp or dull.

- Spectral bandwidth and contrast: showing how wide the frequency range is and how much it changes, which can reflect emotional intensity.

- Onset detection and strength: measuring how sharply notes or sounds begin, which can reveal tension or excitement.

- MFCCs (Mel-frequency cepstral coefficients): detailed fingerprints of the sound’s timbre, often used in scientific studies to classify emotion in speech and music.

So it appears ChatGPT is using some established techniques and offers analysis into established data sets that give the analysis at least some scientific grounding. That said, I remain fully aware that this project is exploratory, personal, and speculative.

What Is Being Explored

Part 1

Can a human emotional state be physically encoded into a sound waves Perhaps even simple sounds, like a single note, a pluck, a tone, might carry traces of the performer’s emotional state in ways that are measurable.

Part 2

If such imprints exist, can human listeners perceive them? Can human perception pick up on these signals, and can we sense an emotional fingerprint in the sound even in the absence of overt musical or expressive cues?

Why It Matters

- Most scientific studies on music and emotion focus on musical structure — major/minor keys, tempo, harmony, or lyrics. Very few investigate the emotional field of the performer and whether it leaves a physical imprint on the soundwave itself.

- By focusing on a single note, without melody and rhythm, I wonder: how little is needed to carry emotional information?

- Even without advanced audio training, an LLM's observable ability to detect emotional differences hints at subtle, non-obvious patterns or microsignals — suggesting machine analysis can detect emotional fingerprints.

- The DIY nature of being able to carry out some research on a subject dreamed up in a random conversation is pretty cool.

- Music might act as an emotional broadcast, carrying the performer’s presence through vibration, like a memory encoded in sound. If emotional state subtly shapes the physical signature of vibration, it becomes quite profound, but related to ideas such as how emotional intention might create field memory that is detectable.

Current Status (May 2025)

- Community feedback has led me to consider recordings by non-musicians, controlling for emotional induction (such as using standardised video prompts), and designing blind listening tests.

- Software tools like Librosa have been identified as essential for detailed audio feature extraction, including spectral flux, microtiming, and MFCC analysis.

- The idea of layered signals has emerged — exploring whether combining multiple emotionally imprinted sounds can create an amplified perceptual signal detectable by listenerss

- Conceptual influences from field theory (such as David Bohm’s work) are inspiring considerations of whether emotional energy leaves subtle traces beyond just musical performance or intention.

- The experiment is evolving into a two-layer test: machine analysis for measurable micro-signals, and human perception tests for detectable emotional impact.

Experiment Design (Current Thinking)

- Participants: A mix of musicians and non-musicians, all instructed to record simple sounds (such as a single note or pluck) under an induced emotional state (happy, sad, etc.).

- Emotional Induction: Use of standardized emotional prompts (video or image) to guide participants into authentic emotional states before recording.

- Recording Setup: Standardised instruments, consistent recording conditions, minimising performance or technical variability.

- Listening Tests: Blind trials where listeners classify the emotional state of anonymised audio clips (happy, sad, don’t know).

- Software Analysis: Use of Librosa or equivalent tools and libraries to extract high-resolution audio features and identify any measurable patterns tied to emotional states. (Python libraries like Librosa or Essentia to automatically extract a wide range of acoustic features (fundamental frequency, spectral centroid, MFCCs, etc.) from the single-note recordings. This will provide a more comprehensive and objective representation of the sound.

- Understanding the analysis using tools like Sonic Visualiser

- Threshold Exploration: Later-phase experiments may explore whether layering multiple emotionally imprinted sounds creates a stronger or emergent perceptual signal.

About Me

I am a digital product designer and music producer living in Berlin. I create music for Blaschko Alley - BlaschkoAlley.com

Get involved

If you’re interested in collaborating, offering feedback, or participating in future listening experiments, please reach out